Articles related to virtual reality with a focus on software development, by the authors of Oculus Rift in Action.

Tuesday, December 15, 2015

My Earth Elevator VR experiment for the Leap Motion 3D Jam was highlighted today on the Leap Motion blog, in the Education group.

I am fascinated by using the Rift and Leap for immersive learning, and it is very fun to see what others are doing. Other entries that look interesting include VRiΩ by Vragments and ChemGrabLab by Christine Hart.

VRiΩ is a VR experience where you shrink down inside a human body and wander through a respiratory tract to fight cancer cells. ChemGrabLab uses the Leap to let you interact with some of the more hazardous substances available, from the safety of your armchair.

Sunday, November 1, 2015

LEAP Motion 3D Jam Entry

I'm working on an entry for the Leap Motion 3D Jam. The Earth Elevator is an educational VR experience where you take an elevator to the center of the earth. The elevator makes several stops along the way to give you information about each layer and time to look around.

This project builds on other work I've covered on this blog in these posts: Using Unity native VR, Re-centering the avatar and knowing when the health and safety warning has been dismissed, Seeing your hands in VR, Hand selection UI prototype, Raising your hand, and Thought bubbles in a Rift scene using world space canvases.

This demo requires a Rift DK2, a LEAP Motion, Windows 8 or later, and Oculus Runtime 0.7. If you want to try it out, you can download it from the 3D Jam web site.

I'm considering adding a table with samples from each layer that you can pick up and look at a bit more closely. Don't know if I can get it done in time for the jam, though.


Thursday, October 1, 2015

Unity 5.2.1: Three quick tips for improving the VR user experience

For demos that I work on, there are three simple things that I always do to create a better user experience:


- make sure the Oculus health and safety warning (HSW) has been dismissed before any other information is displayed

- give the user the option to re-center their avatar after they’ve put the headset on and settled in a comfortable position

- encourage the user to create a profile

If you are a regular reader of this blog, you know this isn't the first time I've covered these topics. However, now that Unity includes native VR support I wanted to revisit these tips and show how to do them with Unity 5.2.1, the 0.7 runtime, and the Oculus Utilities for Unity 0.1-beta package.

Knowing when the HSW has been dismissed

The HSW is a big rectangle centered in front of the user. While it is semi-transparent, it blocks the user’s view significantly. For that reason, I like to make sure that it has been dismissed before displaying anything the user needs to interact with. The Oculus Utilities for Unity 0.1-beta package provides a way to check whether the HSW is still displayed:

OVRManager.isHSWDisplayed

This returns true when the HSW is displayed and false when it is not. Note that if you are running the application in the Editor, you won't see the HSW in the Editor window; however, the HSW will appear in the Rift view.
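The check only tells you whether the HSW is up on the current frame. Below is a minimal sketch of one way to act on it, written in plain C# so the logic can be tested outside Unity: a small gate that stays closed while a "warning displayed" check returns true and opens exactly once afterwards. The WarningGate class and its Tick() method are hypothetical names; in a Unity project you would presumably construct it with () => OVRManager.isHSWDisplayed and call Tick() from a MonoBehaviour's Update().

```csharp
using System;

// Hypothetical helper: keeps interactive content hidden until the health and
// safety warning (HSW) has been dismissed. The delegate is an assumption; in
// Unity it could wrap OVRManager.isHSWDisplayed.
public class WarningGate
{
    private readonly Func<bool> isWarningDisplayed;
    private bool contentShown;

    public WarningGate(Func<bool> isWarningDisplayed)
    {
        this.isWarningDisplayed = isWarningDisplayed;
    }

    // True once the interactive content has been revealed.
    public bool ContentShown { get { return contentShown; } }

    // Call once per frame. Returns true only on the frame the content should
    // first be revealed; afterwards it always returns false.
    public bool Tick()
    {
        if (contentShown || isWarningDisplayed())
        {
            return false;
        }
        contentShown = true;
        return true;
    }
}
```

Passing a delegate instead of reading OVRManager directly keeps the class free of Unity and Oculus dependencies, which is the only reason it can be exercised outside the Editor.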

Re-centering the avatar

It is helpful to give users the option to re-center their virtual selves and this is one of the functions available as part of the Unity native VR implementation.

To re-center, use the UnityEngine.VR namespace and call:

VR.InputTracking.Recenter();


Encouraging the user to create a profile

If the user has not created a profile, the user may experience discomfort because the default IPD or height is different from their own. In previous versions, you could get the name of the profile in use, and if the name returned was "default," you would know that the user had not created a profile. Unfortunately, I’m not seeing a way to do that with the Oculus Utilities for Unity 0.1-beta package. I tried using OVRManager.profile.username, but it returns "Oculus User" and not the name from the user profile. Looking at OVRProfile, it appears that only ipd, eyeHeight, and eyeDepth are retrieved from the current user profile (Note: you can get the user's eye height and IPD using OVRManager.profile.eyeHeight and OVRManager.profile.ipd.)

For now, I’ll just stress the importance of creating a profile in the documentation. If anyone knows how to get the current user name, please comment!

Friday, September 11, 2015

Quick Look: Using Unity 5.2 native VR support to create a scene for the Rift

Unity 5.2 came out before I had time to write a 5.1 native VR post. With the book out, I can now get back to writing blog posts. Here's what happened when I took a look at using Unity 5.2 native VR support to create scenes for the Rift.


I downloaded Unity 5.2 and gave it a quick trial run with the DK2, the Oculus Runtime 0.7.0-beta, and the Oculus Utilities for Unity 5 0.1.0-beta package. As you might have guessed from the runtime version, this test was Windows only. To test it all out, I built a quick sample scene using assets found in the Unity standard asset packages, enabled native VR to get the scene on the Rift, added a player character, and then did a test build and run.

Enabling VR and getting the scene on the Rift

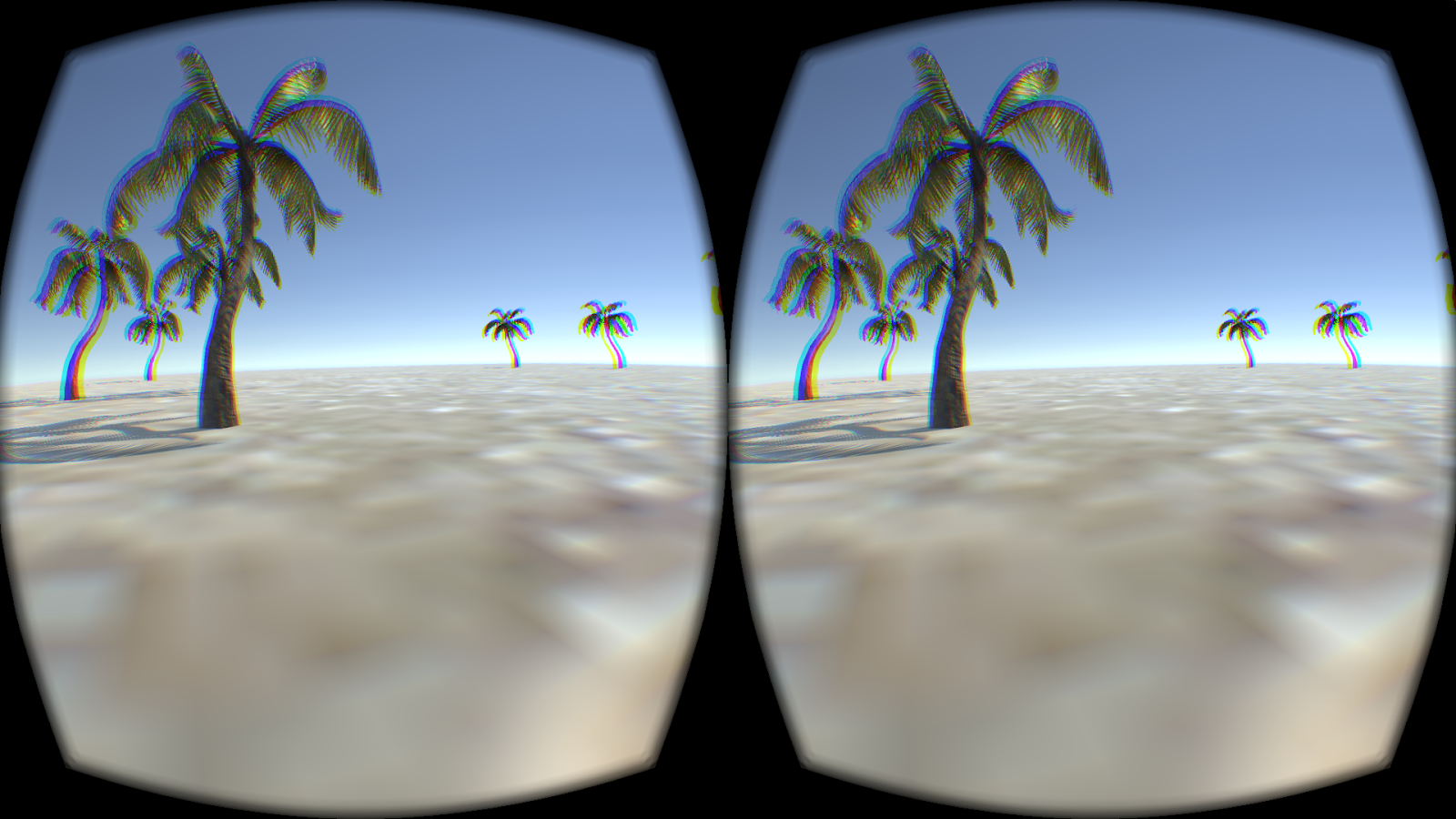

I used the same sample scene I’ve used in all my previous tests, a simple beach scene with a few palm trees created using assets from the Unity standard asset Environment package, along with a single camera located at 0, 1, 0.

Image: The example scene

To run the scene on the Rift, I selected Edit > Project Settings > Player > Other Settings and made sure that Virtual Reality Supported was checked.

Image: Player Settings for VR

That done, with the Rift plugged in, I pressed Play in the Editor and was able to see the scene both in the Editor window and on the Rift (with the 0.7 runtime and Unity 5.2, direct mode works in the Editor). As I moved the headset around, I could see that head tracking was enabled and with the Rift on, I could look anywhere I wanted in the scene. Two differences to note between the Editor view and the Rift view: first, the Editor preview is monoscopic and does not include lens correction, and second, the Oculus Health and Safety warning is visible in the Rift view, but not visible in the Editor window.

With only a camera in the scene and no scripts for navigation, I couldn’t move anywhere. So, my next step was to add a player character.

Adding a player character to navigate the scene

The first option for quickly adding a player character I looked at was to use the first-person player character prefab from the Unity standard assets Characters package. After downloading this package, I simply dragged the FPSController prefab onto the scene and pressed play. This worked, but even though I've been using VR for a while and have my "VR Legs," I still found the default speeds for this controller fairly uncomfortable.

After a break, I took a look at a second option: using the first-person player character prefab (OVRPlayerController) found in the Oculus Utilities for Unity package. This package contains a variety of scripts and utilities that can be used to improve the VR experience (which I’ll be looking at in future posts) along with the first-person player character prefab.

The OVRPlayerController prefab from the Oculus Utilities for Unity package is a basic character controller that uses W, A, S, D controls. The default speeds were slower than the first option I tried and, for me, were much more comfortable to use. In addition, like versions of this prefab from the older integration package, it includes a menu of diagnostic information you can view by pressing the space bar.


Using this menu, I saw that I was easily getting 75 FPS for my sample scene. A good start. One issue I noticed, though, was that while running the scene with the player prefab from the Oculus Utilities for Unity package, the console would sometimes be spammed with the following error:

Quaternion To Matrix conversion failed because input Quaternion is invalid {0.000000, 0.000000, 0.000000, 0.000000} l=0.000000
UnityEngine.Matrix4x4:TRS(Vector3, Quaternion, Vector3)
OVRTrackerBounds:Update() (at Assets/OVR/Scripts/Util/OVRTrackerBounds.cs:151)

Looking on the forums, this does not appear to be a serious issue, and word is it should be fixed in an upcoming release.

Now that I had a VR scene that I could navigate, the next step was to do a test build and run.


Building and running the test application

In previous versions, building a VR application resulted in two executables: <application>_DirectToRift.exe and <application>.exe. Now there is just one: <application>.exe.

I first tried just selecting File > Build Settings > Build and Run, but this didn't work, as the application failed to run. I then built the application and ran the executable by clicking on it, and that worked fine.

Wednesday, August 19, 2015

Code Liberation Foundation: Unity-3D UI with Oculus Rift (Lecture + Workshop) - Sept 5

I am excited to announce that I'll be teaching a class on Unity-3D UI and the Oculus Rift on September 5th for the Code Liberation Foundation.

The Code Liberation Foundation offers free/low cost development workshops in order to facilitate the creation of video game titles by women. Code Liberation events are trans-inclusive and women-only. Women of all skill levels and walks of life are invited to attend.


If you are interested in VR, identify as a woman, and are in the NY area, I'd love to see you there. Space is limited, so sign up today!

Tuesday, August 18, 2015

Wednesday, July 29, 2015

Working with the Rift is changing how I dream

The first video game influenced dream I remember having was back in the late eighties. I was obsessed with Tetris and had very vivid dreams of Tetris blocks falling on me. So, it isn’t surprising to me that playing video games can change the way you dream. That said, the specific effect that working with the Rift has had on my dreams did surprise me.

When using the Rift, you are sitting in place and the world moves around or past you, unlike real life, where you move through the world. Now, no matter what I am dreaming about, my dreams have two kinds of movement: movement where I am moving through a world, and movement where I am still and the world moves around or past me. Perhaps this kind of dreaming is my brain's attempt to make Rift movement feel more natural to me? Is anyone else dreaming like this?


Tuesday, June 23, 2015

Unity + Leap: Explicit instruction and hand gestures

I have been experimenting a bit with the LEAP and looking at getting objects into the user’s hand. In one of my experiments*, the user holds their hand out flat and a fairy appears. This experiment used explicit instruction written on menus to tell the user what to do.


Explicit instruction worked in that my test users did what I wanted them to do: nod and hold their hand out flat. The downside, of course, is that it required them to read instructions, which isn’t very immersive or fun. In future experiments, I want to look at implicit instruction, such as having non-player characters perform actions first.

* This demo is now available from the Leap Motion Gallery.

Notes on getting an object to appear on a user’s hand

Some quick notes on getting the fairy to appear on the user’s hand:

You can find all of the hand models in a scene using:

HandModel[] userHands = handController.GetAllPhysicsHands();

To know if the hand is palm up, you can get the normal vector projecting from the hand relative to the controller, using:

userHands[0].GetPalmNormal()

To know if the hand is open or closed, you can look at the hand’s grab strength. The strength is zero for an open hand, and blends to 1.0 when a grabbing hand pose is recognized.

userHands[0].GetLeapHand().GrabStrength

To know where to place the object, you can get the palm position (relative to the controller), using:

userHands[0].GetPalmPosition()
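Putting the first two checks together, here is a minimal sketch of the "open hand, palm up" test in plain C#, so it can run outside Unity. The Vec3 struct stands in for Unity's Vector3, and the FairyPlacement class, its method, and both thresholds are hypothetical values chosen for illustration, not taken from the demo. It assumes the palm normal is a unit vector expressed in a frame where +Y is up.

```csharp
using System;

// Hypothetical stand-in for UnityEngine.Vector3 so the sketch runs outside Unity.
public struct Vec3
{
    public float X, Y, Z;

    public Vec3(float x, float y, float z) { X = x; Y = y; Z = z; }

    public static float Dot(Vec3 a, Vec3 b)
    {
        return a.X * b.X + a.Y * b.Y + a.Z * b.Z;
    }
}

public static class FairyPlacement
{
    // Assumed thresholds; tune them through user testing.
    public const float PalmUpThreshold = 0.8f;  // cosine of roughly 37 degrees from straight up
    public const float OpenHandMaxGrab = 0.2f;  // grab strength: 0 = open hand, 1 = grabbing pose

    // True when the palm normal points roughly up and the hand is mostly open.
    public static bool IsOpenPalmUp(Vec3 palmNormal, float grabStrength)
    {
        Vec3 up = new Vec3(0f, 1f, 0f);
        bool palmUp = Vec3.Dot(palmNormal, up) >= PalmUpThreshold;
        bool open = grabStrength <= OpenHandMaxGrab;
        return palmUp && open;
    }
}
```

In Unity, you would presumably build the Vec3 from userHands[0].GetPalmNormal(), read the grab strength from userHands[0].GetLeapHand().GrabStrength, and, when IsOpenPalmUp returns true, move the object to userHands[0].GetPalmPosition(). If the normal you get is relative to a head-mounted controller rather than the world, it would need to be converted to world space first for "up" to mean up.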

Wednesday, June 3, 2015

Unity + Leap: Raising Your Hand to Get a Character's Attention

A common interaction in real life is to raise your hand to get someone’s attention. We do it when we are meeting someone in a crowd to help them find us, we do it when we are at school to get the teacher’s attention, and we do it as parents to get our child’s attention so they know that we are there and watching. We also do it when we want to hail a cab or make a bid at an auction. It is a simple enough interaction that babies do it almost instinctively. As simple as raising your hand is, using it as a mechanic in a VR environment brings up some interesting questions. How high should the user raise their hand to trigger the interaction? How long does the user need to have their hand raised? And, what should happen if the application loses hand tracking?


To experiment with this interaction, I created a demo consisting of a single character idling, minding his own business. When the user raises their hand, the character waves back and a speech bubble appears saying “Hello, there!”

Let’s take a look at the demo setup and then look at how testing the user experience went.

Setup

To create the scene, I used basic 3D objects (planes, cubes) and a directional light to create a simple room. The character in the scene is a rigged human character ("Carl") from the Male Character Pack by Mixamo. The speech bubble is created using a world space canvas (see: Thought Bubbles in a Rift scene). To get my hands in the scene, I used the LeapOVRPlayerController from the Leap Unity Core Assets v2.2.4 (see: Seeing your hands in VR).

For the character animation, I used the Idle and Wave animations from the Raw Mocap data package for Mecanim by Unity Technologies (free animations created from motion capture data), and I created an animation controller for the character to control when he is idling and when he waves back at you. The animation controller has two animation states, Idle and Wave. It also has two triggers that can be used to trigger the transition between each state:

Image: The animation controller for the waving character has two states and two triggers.

And, of course, I wrote a script (wavinghello.cs) to detect when the user has raised their hand. The interesting bit of this script is how you know where the user’s hands are and how you know when a hand has been raised high enough so that you can trigger the appropriate animation. Let's take a look at the script's Update() function:

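void Update () {
    // Get all Leap physics hands for this HandController.
    HandModel[] userHands = handController.GetAllPhysicsHands();

    // Check every tracked hand before deciding what to do, so a lowered hand
    // later in the array cannot cancel a raised one. With no hands tracked,
    // this stays false (treated as all hands below eye level).
    bool handRaised = false;
    foreach (HandModel models in userHands) {
        if (models.GetPalmPosition().y >= centerEyeAnchor.transform.position.y) {
            handRaised = true;
            break;
        }
    }

    // Set the animation trigger and the speech bubble once per frame.
    if (handRaised) {
        anim.SetTrigger("Wave");
        changeMenuDisplay(speechbubble, 1);
    } else {
        anim.SetTrigger("Idle");
        changeMenuDisplay(speechbubble, 0);
    }
}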

To get all of the hands in the scene, the script uses GetAllPhysicsHands() from HandController.cs:

HandModel[] userHands = handController.GetAllPhysicsHands();

GetAllPhysicsHands() returns an array of all Leap physics HandModels for the specified HandController. To get each hand's position, the script uses GetPalmPosition() which returns the Vector3 position of the HandModel relative to the HandController. The HandController is located at 0, 0, 0 relative to its parent object, the CenterEyeAnchor.

Image: The HandController is a child of the CenterEyeAnchor.

Image: The HandController is located at 0, 0, 0 relative to its parent, the CenterEyeAnchor.

The CenterEyeAnchor object is used by the Oculus Rift integration scripts to maintain a position directly between the two eye cameras. As the cameras are the user’s eyes, if the Y value of a HandModel object's position is greater than the Y value of the centerEyeAnchor, we know the user's hand has been raised above eye level.

The user experience

When testing this demo, I was looking at how high the user should raise their hand to trigger the interaction, how long the user should have their hand raised, and what the application should do when it loses hand tracking. Initially, I went with what seemed comfortable for me. I required users to raise their hand (measured from the center of their palm) above eye level, and I did not require the user's hand to be raised for any specific amount of time. If the Leap lost hand tracking, the application treated it as though all hands were below eye level.

I then grabbed three people to do some testing. The only instruction I gave them was to “raise your hand to get the guy’s attention.” For my first user, the demo worked quite well. He raised his hand and the character waved back as expected. Great so far. My second user was resistant to raising his hand any higher than his nose. He quickly got frustrated as he could not get the guy’s attention. My third user raised his hand and then waved it around so wildly that the speech bubble flickered and was unreadable. Quite a range of results for only three users.

For my next iteration, I set the threshold for raising one’s hand a few centimeters below eye level.

models.GetPalmPosition().y >= centerEyeAnchor.transform.position.y - 0.03f

This worked for my second user as it was low enough that he would trigger the interaction, but not so low that he would accidentally trigger it.

I haven’t done anything to address the third user yet, but whatever I do, waving my hands like a maniac is now part of my own testing checklist.
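One direction worth trying, sketched here in plain C# under stated assumptions rather than as anything the demo does, combines the post's 3 cm threshold with two additions: a minimum hold time before the wave starts (the "how long" question above) and a lower release threshold (hysteresis), so a hand moving around near eye level does not toggle the wave on and off every frame. The RaisedHandDetector class, its Step() method, and the 10 cm and 0.25 s values are hypothetical.

```csharp
using System;

// Hypothetical helper (not part of wavinghello.cs): decides whether the
// character should wave. Heights are in meters, in the same frame as the
// palm and eye positions.
public class RaisedHandDetector
{
    // From the post: a hand counts as raised 3 cm below eye level.
    public float RaiseOffset = 0.03f;
    // Assumption: the hand must drop 10 cm below eye level before the wave
    // stops (hysteresis), so small movements near the threshold don't flicker.
    public float ReleaseOffset = 0.10f;
    // Assumption: how long the hand must stay raised before the wave starts.
    public float HoldSeconds = 0.25f;

    private float heldFor;

    public bool IsWaving { get; private set; }

    // highestPalm is the highest palm height across all tracked hands, or
    // null when no hands are tracked. As in the original script, lost
    // tracking is treated as "all hands below eye level".
    public bool Step(float? highestPalm, float eyeHeight, float deltaSeconds)
    {
        if (!highestPalm.HasValue)
        {
            heldFor = 0f;
            IsWaving = false;
            return IsWaving;
        }

        float palm = highestPalm.Value;
        if (!IsWaving)
        {
            if (palm >= eyeHeight - RaiseOffset)
            {
                // Accumulate time above the raise threshold.
                heldFor += deltaSeconds;
                IsWaving = heldFor >= HoldSeconds;
            }
            else
            {
                heldFor = 0f;
            }
        }
        else if (palm < eyeHeight - ReleaseOffset)
        {
            // Only stop once the hand is clearly lowered.
            IsWaving = false;
            heldFor = 0f;
        }
        return IsWaving;
    }
}
```

In the script's Update(), you would presumably pass the largest GetPalmPosition().y among the hands (or null when GetAllPhysicsHands() returns an empty array), centerEyeAnchor.transform.position.y, and Time.deltaTime, and then call anim.SetTrigger(...) and changeMenuDisplay(...) only when IsWaving changes. Because lost tracking still resets the detector, a hand that drops out of the Leap's view mid-wave would still end the wave; treating short tracking gaps differently would be a separate design decision.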

I’d love to hear if anyone else is using this type of mechanic and what their experiences are.

Labels: DK2, GUI, leap motion, oculus, oculus rift, rift, UI, unity, usability, virtual reality, VR

Friday, May 15, 2015

Oculus SDK 0.6.0 and Oculus Rift in Action

Oculus publicly released version 0.6 of their SDK today. This version represents the biggest change to the API since the first release of the C API in version 0.3, slightly over a year ago. The new version simplifies the API in a number of ways:


- Removes the distinction between direct and extended modes from the perspective of the developer

- Removes support for client-side distortion

- Moves the management of the textures used for offscreen rendering from the client application to the SDK

- Fixes a number of OpenGL limitations

- etc, etc...

So those who have purchased or intend to purchase our book may be asking themselves what version we'll be covering exactly.

The short answer is that currently the book covers version 0.5 of the SDK (which itself is almost identical to version 0.4.4). That isn't likely to change before print. At this point we're well into the final stages of publication, doing final editorial passes for proofreading. If we were to update to 0.6 we would have to go back and revise quite a few chapters and start much of that work over again. That said, when we found out what a big change 0.6 was, there was much discussion, consideration and consternation.

However, with the public release of the SDK, there came a more compelling reason for us to stay with the version we're currently targeting. In a recent blog post on the Oculus website, Atman Binstock stated...

Our development for OS X and Linux has been paused in order to focus on delivering a high quality consumer-level VR experience at launch across hardware, software, and content on Windows. We want to get back to development for OS X and Linux but we don’t have a timeline.

Since we started writing the book, we've felt very strongly about keeping a cross-platform focus. While it's likely that most of the initial consumer demand for the Rift will be driven by gamers, we the authors feel that VR has a promising future beyond just the realms of gaming. Two of the core components that make the Rift possible are powerful consumer graphics processors and cheap, accurate inertial measurement units (IMUs). Much of the initial consumer demand for both of these technologies was driven by gaming, but in both cases the use of the technologies is spreading far beyond that.

We believe that VR will also grow beyond the confines of gaming, probably in ways we can't even imagine right now. However, to do that, we need to make sure as many people as possible have the opportunity to try new ideas. And while Macs and Linux machines may hold an almost insignificant market share when it comes to gaming, we believe that new ideas can be found anywhere and that innovators probably aren't divided proportionately in the same way that operating systems are.

So we were faced with a choice. We could stick with the 0.5 SDK, which still functions with the new Oculus runtime (at least for the DK1 and DK2). We could abandon Linux and Mac developers and switch to the 0.6 SDK. Or we could try to cover both 0.6 and 0.5 in the book. We chose the first option.

Now, if you're a Windows developer and you want to move to 0.6, we still want to support you. To that end, we will have a branch of our GitHub examples that will keep up to date with the latest version of the SDK.

Additionally, we will try to cover the gaps between what the book teaches and the current version of the SDK with articles on this blog. I'm not talking about errata, but something more like whole updated chapter sections.

We will also be working to create examples focused on other mechanisms of working with VR devices, such as the Razer OSVR and the HTC Vive, using the appropriate SDKs.

We also feel that there is a great deal of value in the book as it stands now that lies outside of the low-level SDK details. In fact, to be honest, the bulk of the changes to the book would probably be in chapters 4 and 5, out of a dozen chapters.

The point is, whatever platform you're on, whatever hardware you're working with, if you want to create a world, we want to help. The forthcoming edition of Oculus Rift in Action is only the first step.

Monday, May 11, 2015

Book Update!

We are heading into the final stretch with our book Oculus Rift in Action. All chapters are now complete and we are just about half-way through the final editing process. The book is available for pre-order from Amazon. If you can't wait to get your hands on it, you can order directly from Manning Publications and get access to all chapters now through the Manning Early Access Program.

Thursday, April 2, 2015

Unity + Leap: Hand Selection UI Prototype

Immersion is definitely affected by how closely your avatar's hands resemble your own. In the demo I am working on, I want the user to be able to select their in-game hands before entering the game. A prototype in-game UI for hand selection is seen in the video below.

To create this UI, I created a world space canvas and added buttons for each of the available hands. To each button, I added a box collider as a child object. A script attached to the box collider detects when a hand has collided with it. To detect a hand, I used the Leap libraries and then checked to see if the collision object is a Leap HandModel.
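In Unity, the physics engine performs the overlap test and notifies the script attached to the box collider. The script then only has to check whether the other collider belongs to a Leap HandModel. The sketch below is a rough, engine-independent illustration of the geometry that test reduces to. It assumes an axis-aligned box and a single tracked point such as the palm, and uses a hypothetical `ButtonBox` type built on System.Numerics rather than the Unity or Leap API. The optional margin does the same job as a large button: it makes the target more forgiving of imprecise tracking.

```csharp
using System;
using System.Numerics;

// Hypothetical, engine-independent stand-in for a button's box collider.
// Unity's physics engine does this overlap test for you; this only shows
// the geometry for an axis-aligned (unrotated) box.
public readonly struct ButtonBox
{
    public readonly Vector3 Center;
    public readonly Vector3 HalfSize;

    public ButtonBox(Vector3 center, Vector3 size)
    {
        Center = center;
        HalfSize = size / 2f;
    }

    // True if the point lies inside the box grown by `margin` on every side.
    // A bigger margin, like a bigger button, tolerates sloppier hand tracking.
    public bool Contains(Vector3 point, float margin = 0f)
    {
        Vector3 d = Vector3.Abs(point - Center);
        Vector3 limit = HalfSize + new Vector3(margin);
        return d.X <= limit.X && d.Y <= limit.Y && d.Z <= limit.Z;
    }
}
```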

In this prototype UI, I am using large buttons for two reasons. First, reading small text in the Rift can be difficult. Second, while using the Leap allows me to see my hands, in my experience it does not track finger motion well enough for detailed interactions to be effective. In several of the tests I ran, the user's hand was generally in the right place, but the fingers were more often than not at different angles from the user's actual fingers. The effect was that my users seemed to have the fine motor skills of a toddler: they could reach out and touch everything, but they didn't have a lot of control. On the positive side, when users have hands in the game, they very naturally try to touch items with them. Even when users don't have visible hands in the scene, you'll often see them reaching out to try to touch things. Although the start button says "Touch to Start," once users know to use their hands to affect the scene, they get it right away and don't need prompting or other instructions.

Leap Motion has just released a "Best Practices Guide" and I'll be looking at incorporating many of the ideas documented there in future prototypes.


Tuesday, March 24, 2015

Unity: Mac Direct to Rift Plugin by AltspaceVR

When using the Rift on a Mac, Oculus recommends extended mode for the monitor configuration because it provides better performance. Performance is indeed much better in extended mode. Running the Tuscany demo, I get 75 FPS in extended mode. In mirrored mode, I get 46 FPS with the refresh rate set to 75 Hz and 60 FPS with the refresh rate set to 60 Hz. However, getting the app to run on the extended portion of the desktop can be a bit of a pain.
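Converting those frame rates into time per frame makes the difference more concrete. The DK2 display refreshes at 75 Hz, so an application has roughly 13.3 ms to produce each frame. At 46 FPS, each frame is taking about 21.7 ms, so new frames are not arriving on every refresh:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{\text{FPS}}, \qquad
\frac{1000}{75} \approx 13.3\ \text{ms}, \qquad
\frac{1000}{60} \approx 16.7\ \text{ms}, \qquad
\frac{1000}{46} \approx 21.7\ \text{ms}
$$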

To help provide a better Mac user experience, the folks at AltspaceVR have created a plugin that can be integrated into your Unity project so that your application launches seamlessly onto the Rift when using a Mac. They have kindly made this project available on GitHub.

Friday, March 6, 2015

Quick Look: Unity 5 and the Oculus Unity 4 Integration Package 0.4.4

I downloaded Unity 5 yesterday and gave it a quick trial run with the Oculus Integration Package 0.4.4 for the DK2. To test it out, I first built a quick sample scene using assets found in the Unity standard asset packages. Using that scene, I then tried two methods for getting the scene onto the Rift:

- Using the OVRPlayerController prefab

- Using the First Person Controller prefabs and scripts found in the Unity Standard Assets with the OVRCameraRig prefab

Creating the sample scene

I created a sample scene similar to the one I’ve been using for previous tests: a beach scene using only Unity standard assets. Unity 5 includes a significant refresh of the standard asset packages, which is very cool. And nicely for me, they still include palm trees and a sand texture. One change of note is that skyboxes are now set in Window -> Lighting instead of Edit -> Render Settings. Unity 5 comes with a single default skybox, which is what I used in this scene. Unity 5 doesn’t include a skyboxes standard asset package, at least not that I found. I did try using the SunnySky box material from the 4.6 skyboxes package, but it does not render nicely.

Figure: Beach scene created using Unity 5 standard assets. Notice how much prettier the palm trees are compared to the 4.6 assets.

Now to get the scene running in the Rift.


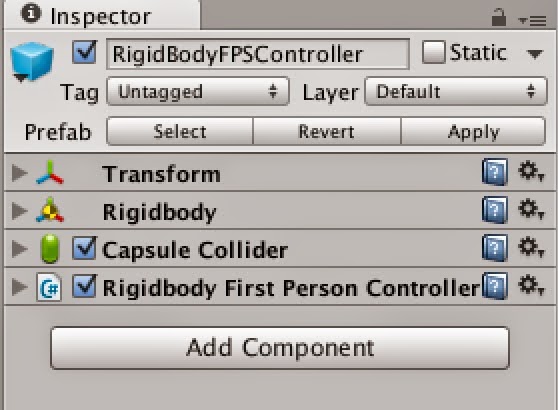


Using OVRPlayerController

After downloading and importing the Unity 4 Integration Package 0.4.4, the first thing I tried was just dropping the OVRPlayerController prefab into the scene. The OVRPlayerController character height is 2, so when placing the prefab in the scene I made sure to set the Y value to 1 so it was not colliding with the beach plane. Also, unlike in 4.6, the palm tree assets have colliders attached, so I made sure my player was placed so that it was not colliding with a palm tree.*

However, before I could build the scene, I needed to address the two errors I was getting:

Assets/OVR/Scripts/Util/OVRMainMenu.cs(250,43): error CS0117: `UnityEngine.RenderMode' does not contain a definition for `World'

Assets/OVR/Scripts/Util/OVRMainMenu.cs(969,43): error CS0117: `UnityEngine.RenderMode' does not contain a definition for `World'

To get the scene to build, I edited OVRMainMenu.cs and changed:

c.renderMode = RenderMode.World;

to

c.renderMode = RenderMode.WorldSpace;

in the two places where that line occurs. With that done, I was able to build and run the scene on the DK2.

Figure: Beach scene on the Rift

Running this on a MacBook Pro in mirrored mode, I was seeing around 60 FPS, and in extended mode around 75.

*Actually, I didn’t check this on the first test, and at the start of the scene the collision made the view jitter around, which was very unpleasant.

Using the OVRCameraRig with the first person character controller prefabs from the standard assets

My next test was to try to use the OVRCameraRig prefab with the first person character controller from the standard assets. This did not go as well. In Unity 5, there are two first person player prefabs: FPSController and RigidbodyFPSController.

The FPSController prefab

The FPSController prefab uses the FirstPersonController.cs script. This script has a number of options, including footstep sounds, head bob and FOV Kick. These options can be great in traditional games, but for VR they can be rather problematic. Head bob and FOV Kick are particularly concerning, as these types of motion can be severe motion sickness triggers for some users. Based on that, I didn’t want to spend too much time trying to adapt this script. Instead, I looked at the RigidBodyFPSController.

RigidBodyFPSController

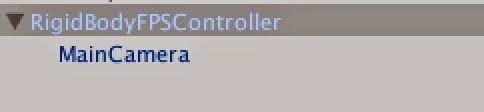

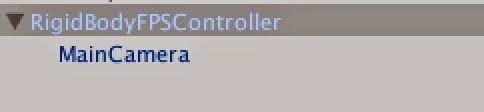

The RigidBodyFPSController prefab consists of the RigidBodyFPSController object with the MainCamera as a child object.

Looking at the RigidBodyFPSController object, you can see that it has a RigidBody, a Capsule Collider and the Rigidbody first person controller script.

To adapt this prefab for use in VR, I first deleted the MainCamera child object and then added the OVRCameraRig in its place.

Note: The MainCamera had a headbob.cs script attached to it. Head bob isn’t something I want in my VR application, and the documentation says that script can be safely disabled or removed.

The Rigidbody First Person Controller script’s Cam variable had been set to the MainCamera. With the MainCamera removed, in the inspector for the script I set it to LeftEyeAnchor.

I then gave it a test run.

I was seeing frame rates similar to the OVRPlayerController test, but the scene was noticeably more jittery. This may be due to using LeftEyeAnchor as the camera, but it would take more research to know what is really going on.

Update: March 30, 2015

The build errors appear to be fixed in the 0.5.0.1 Beta version of the Integration Package. When using 0.5.0.1, you need to make sure you have updated to the 0.5.0.1 Beta version of the Runtime Package for it to work. I was not able to build my project until I had updated the Runtime Package as well.

Friday, February 13, 2015

A look at the Leap Motion: Seeing your hands in VR

In many VR demos you are just a floating head in space. For me, this breaks the immersion as it makes me feel like I am not really part of the virtual world. Demos that include a body feel more immersive, but they are also a bit frustrating. I want my avatar’s hands to move when my hands do. To experiment with getting my hands into the scene, I got a Leap Motion controller.

When using the Leap with the Rift, you need to mount it on the Rift itself using a small plastic bracket. You can purchase the bracket from Leap but they also make the model available on Thingiverse so you can print one out yourself should you have a 3D printer. (I do and I thought that was very cool. I really felt like I was living in the future printing out a part for my VR system.)

Once I got the mount printed out and attached to my Rift and completed the Leap setup instructions, I tried some of the available VR demos. Seeing hands in the scene really made it feel a lot more immersive, but what really upped the immersion was seeing hands that looked almost like mine. The Leap development package includes a nice variety of hand models (by their naming conventions, I’m a light salt), and that variety is greatly appreciated.

When running the demos, the biggest problems I had with the Leap were false positive hands (extra hands) in the scene, having my hands disappear rather suddenly, and poor tracking of my fingers. Two things that helped were making sure the Rift cables were not in front of the Leap controller and removing or covering reflective surfaces in my office (particularly the arm rest on my chair). Even with those changes, having the perfect office setup for the Leap is still a work in progress.

I’ve downloaded the Unity core assets and I’ll be talking more about developing for the Leap using Unity in future posts. Here’s a preview of what I am working on:


Wednesday, February 4, 2015

Unity 4.6: Silent conversation - Detecting head gestures for yes and no

One of the demos that I have really enjoyed is “Trial of the Rift Drifter” by Aldin Dynamics. In this demo you answer questions by nodding or shaking your head for yes and no. This is a great use of the head tracker data beyond changing the user’s point of view, and it is a mechanic that I would like to add to my own applications because it really adds to the immersive feel.

As an example, I updated the thought bubbles scene I created earlier to allow a silent conversation with one of the people in the scene and this blog post will cover exactly what I did.

In my scene, I used a world-space canvas to create the thought bubble. This canvas contains a canvas group (ThoughtBubble) which contains an image UI object and a text UI object.

I wanted the text in this canvas to change in response to the user shaking their head yes or no. I looked at a couple of different ways of detecting nods and head shakes, but ultimately went with a solution based on this project by Katsuomi Kobayashi.
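The actual detection in this post comes from Kobayashi's RiftGesture.cs, whose internals aren't covered here. To illustrate the general idea only, the sketch below is a hypothetical detector and not the RiftGesture algorithm. It treats a nod as the pitch angle moving away from its starting value by more than a threshold and coming back within a short time window. It treats a headshake as the yaw angle swinging past the threshold in both directions before returning. The `SwingDetector` name and the threshold and window values in the usage are made-up illustrations, not tuned numbers.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical gesture detector (illustration only, not RiftGesture.cs).
// Feed it pitch angles to detect nods, or yaw angles with
// requireBothDirections = true to detect headshakes.
// Angles are in degrees, time in seconds.
public sealed class SwingDetector
{
    private readonly Queue<(double time, double angle)> samples =
        new Queue<(double time, double angle)>();
    private readonly double windowSeconds;
    private readonly double thresholdDegrees;       // how far the head must swing away
    private readonly double returnToleranceDegrees; // how close to the start counts as "back"
    private readonly bool requireBothDirections;    // true for left-and-right headshakes

    public SwingDetector(double windowSeconds, double thresholdDegrees,
                         double returnToleranceDegrees, bool requireBothDirections)
    {
        this.windowSeconds = windowSeconds;
        this.thresholdDegrees = thresholdDegrees;
        this.returnToleranceDegrees = returnToleranceDegrees;
        this.requireBothDirections = requireBothDirections;
    }

    // Adds one sample; returns true when a complete gesture is recognized.
    public bool AddSample(double time, double angle)
    {
        samples.Enqueue((time, angle));

        // Drop samples older than the time window (the newest always stays).
        while (time - samples.Peek().time > windowSeconds)
            samples.Dequeue();

        // The oldest remaining sample serves as the resting angle.
        double baseline = samples.Peek().angle;
        bool wentUp = false, wentDown = false;

        // Walk the samples in order: swing away first, then come back.
        foreach (var (_, a) in samples)
        {
            double offset = a - baseline;
            if (offset > thresholdDegrees) wentUp = true;
            if (offset < -thresholdDegrees) wentDown = true;

            bool swung = requireBothDirections ? (wentUp && wentDown) : (wentUp || wentDown);
            if (swung && Math.Abs(offset) <= returnToleranceDegrees)
            {
                samples.Clear(); // start fresh so one gesture fires only once
                return true;
            }
        }
        return false;
    }
}
```

In a Unity script, a detector like this would be fed once per frame, and a detection would lead to the SendMessage calls the post describes.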

To use the gesture recognition solution from this project in my own project, I first added the two Rift Gesture files (RiftGesture.cs and MyMath.cs) to my project and then attached the RiftGesture.cs script to the ThoughtBubble.

When you look at RiftGesture.cs, there are two things to take note of. First, you’ll see that to get the head orientation data, it uses:

OVRPose pose = OVRManager.display.GetHeadPose();

Quaternion q = pose.orientation;

This gets the head pose data from the Rift independent of any other input. When I first looked at adding head gestures, I tried using the transform from one of the cameras, on the logic that the camera transform follows the head pose. That turned out to be problematic because the camera transform can also be affected by input from devices other than the headset (keyboard, mouse, gamepad). As a result, a headshake could be detected when the user rotated the avatar with the mouse rather than shaking their head. Using OVRManager.display.GetHeadPose() ensures you are only evaluating data from the headset itself.
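The post doesn't show how RiftGesture.cs turns `q` into the angles it tracks. One common approach, sketched here as an assumption rather than the script's actual code, is to extract yaw and pitch straight from the quaternion. Yaw is rotation about the vertical Y axis, which changes during a headshake. Pitch is rotation about the X axis, which changes during a nod. The sketch uses System.Numerics.Quaternion in place of UnityEngine.Quaternion so it runs outside Unity. The formulas assume Unity's rotation order: roll about Z first, then pitch about X, then yaw about Y. The usage relies on System.Numerics.Quaternion.CreateFromYawPitchRoll composing its angles in that same order.

```csharp
using System;
using System.Numerics;

public static class HeadAngles
{
    // Pitch (about X, nodding) and yaw (about Y, shaking) in degrees,
    // assuming the quaternion was composed as yaw * pitch * roll
    // (roll applied first, yaw last), as in Unity.
    public static (double pitchDeg, double yawDeg) FromQuaternion(Quaternion q)
    {
        // Clamp to guard against rounding slightly outside asin's domain.
        double sinPitch = Math.Clamp(2.0 * (q.W * q.X - q.Y * q.Z), -1.0, 1.0);
        double pitch = Math.Asin(sinPitch);
        double yaw = Math.Atan2(2.0 * (q.X * q.Z + q.W * q.Y),
                                1.0 - 2.0 * (q.X * q.X + q.Y * q.Y));
        const double radToDeg = 180.0 / Math.PI;
        return (pitch * radToDeg, yaw * radToDeg);
    }
}
```

Unity's own `q.eulerAngles` reports angles wrapped into the 0 to 360 degree range, so small rotations in one direction show up as values near 360. Signed angles like these are easier to compare against a gesture threshold.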

Second, you will also notice that it uses SendMessage in DetectNod() when a nod has been detected:

SendMessage("TriggerYes", SendMessageOptions.DontRequireReceiver);

and in DetectHeadshake() when a headshake has been detected:

SendMessage("TriggerNo", SendMessageOptions.DontRequireReceiver);

The next step I took was to create a new script (conversation.cs) to handle the conversation. This script contains a bit of setup to get and update the text in the canvas and to make sure that the dialog is visible to the user before it changes. (The canvas group's visibility is controlled by its alpha property.) Most importantly, though, this script contains the TriggerYes() and TriggerNo() functions that receive the messages sent from RiftGesture.cs. These functions simply update the text when a nod or headshake message has been received. I attached the conversation.cs script to the ThoughtBubble object and dragged the text object from the canvas to the questionholder so that the script would know which text to update.
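conversation.cs itself isn't reproduced in the post. The sketch below is a hypothetical, Unity-free approximation of how the pieces fit together. A `Conversation` class exposes the same TriggerYes() and TriggerNo() entry points, and an `Alpha` field stands in for the canvas group's alpha. A small reflection-based `SendMessage` stand-in mimics SendMessageOptions.DontRequireReceiver by silently doing nothing when an object has no matching method. Unity's real SendMessage calls the named method on every script attached to the GameObject; this is only a simplified model of that behavior. The class names and the question and reply strings are placeholders.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical stand-in for conversation.cs (the original script is not shown).
public class Conversation
{
    public float Alpha = 1f;                            // stands in for the canvas group's alpha
    public string Text = "Is this your first time in VR?"; // placeholder question

    // Only change the text while the thought bubble is actually visible.
    private bool Visible => Alpha > 0f;

    public void TriggerYes() { if (Visible) Text = "Welcome to VR!"; } // placeholder reply
    public void TriggerNo() { if (Visible) Text = "Welcome back!"; }   // placeholder reply
}

public static class Messaging
{
    // Simplified model of SendMessage(name, SendMessageOptions.DontRequireReceiver):
    // call a public, parameterless instance method named `methodName` on every
    // component that has one, and silently skip components that don't.
    public static void SendMessage(IEnumerable<object> components, string methodName)
    {
        foreach (object component in components)
        {
            MethodInfo method = component.GetType().GetMethod(
                methodName, BindingFlags.Public | BindingFlags.Instance,
                null, Type.EmptyTypes, null);
            method?.Invoke(component, null);
        }
    }
}
```

Dispatching by name keeps the gesture script unaware of which scripts react to a nod. The cost is that a misspelled method name fails silently, which is exactly what DontRequireReceiver allows.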

At this point I was able to build and test my scene and have a quick telepathic conversation with one of the characters.


Figure: Hierarchy of the world space canvas


Figure: Scripts attached to the ThoughtBubble canvas group


Friday, January 9, 2015

Unity 4.6: Creating a look-based GUI for VR

In a previous post, I talked about creating GUIs for VR using world space canvases. In that example, the GUI only displayed text - it didn't have any input components (buttons, sliders, etc). I wanted to add a button above each thought bubble the user could click to hear the text read aloud.

Since I had used a look-based interaction to toggle the visibility of the GUI, this raised an obvious question: how do I use a similar interaction for GUI input? And, importantly, how do I do it in a way that takes advantage of Unity's GUI EventSystem?

Turns out, what's needed is a custom input module that detects where the user is looking. There is an excellent tutorial posted on the Oculus forums by css that is a great place to start. That tutorial includes the code for a sample input module and walks you through the process of setting up the GUI event camera. (You need to assign an event camera to each canvas, and one twist is that the OVR cameras don’t seem to work with the GUI.) By following that tutorial, I was able to get look-based input working very quickly.
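The tutorial's input module isn't reproduced here. At its core, a look-based pointer casts a ray from between the user's eyes along the view direction and checks which UI element it lands on. The engine-independent sketch below shows that geometry under stated assumptions. It uses System.Numerics instead of Unity's raycasters, a flat canvas, and a hypothetical `GazeHitTest` helper. It intersects the gaze ray with the canvas plane, then tests whether the hit point falls inside a button's rectangle.

```csharp
using System;
using System.Numerics;

// Engine-independent sketch of a look-based hit test (not Unity's raycasting API).
public static class GazeHitTest
{
    // Intersects the gaze ray (origin + t * direction, t >= 0) with the canvas plane.
    public static bool TryIntersectPlane(Vector3 origin, Vector3 direction,
                                         Vector3 planePoint, Vector3 planeNormal,
                                         out Vector3 hit)
    {
        hit = Vector3.Zero;
        float denom = Vector3.Dot(planeNormal, direction);
        if (MathF.Abs(denom) < 1e-6f)
            return false; // looking parallel to the canvas
        float t = Vector3.Dot(planePoint - origin, planeNormal) / denom;
        if (t < 0f)
            return false; // canvas is behind the viewer
        hit = origin + t * direction;
        return true;
    }

    // Tests whether a point on the canvas lies inside a button rectangle.
    // `right` and `up` are assumed to be unit vectors spanning the canvas plane.
    public static bool InsideButton(Vector3 point, Vector3 buttonCenter,
                                    Vector3 right, Vector3 up,
                                    float halfWidth, float halfHeight)
    {
        Vector3 local = point - buttonCenter;
        return MathF.Abs(Vector3.Dot(local, right)) <= halfWidth
            && MathF.Abs(Vector3.Dot(local, up)) <= halfHeight;
    }
}
```

The same geometry explains why a GUI parented to the camera defeats look-based input. When the canvas moves with the head, the gaze ray always lands on the same spot of the canvas, so the user can never aim at a different button.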

Note that while look-based interactions are immersive and fairly intuitive to use, it is worth keeping in mind that look-based input won’t work in all situations. For example, if you have attached the GUI to CenterEyeCamera to ensure that the user always sees the GUI, the GUI will follow the user’s view meaning the user won’t be able to look at any one specific option.
